Microservices Guide: Detecting Data Mismatches

By Abid Khan / April 9, 2025

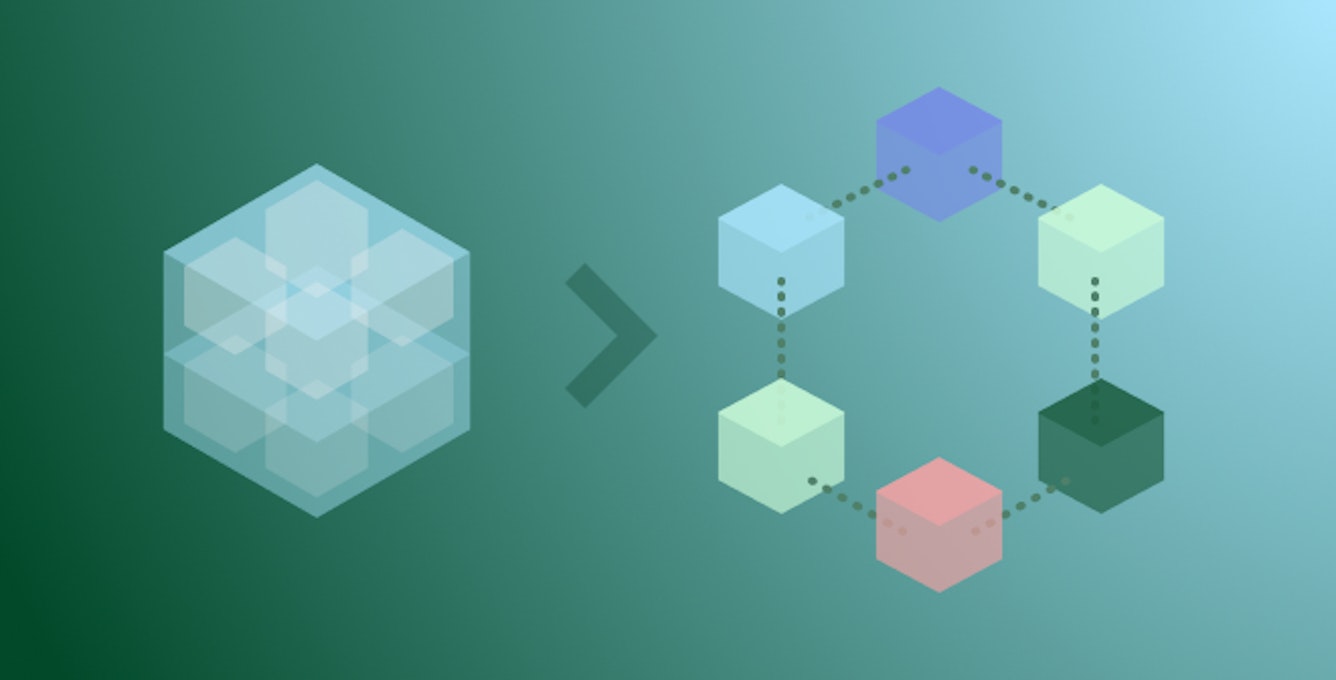

To develop testable, scalable software systems, many organizations are adopting a microservices architecture, which breaks monolithic applications down into independent, domain-specific services. This makes systems more modular and maintainable. Because these microservices operate in isolation, each employs the transaction management technique that suits its requirements. Some transactions follow an immediate commit pattern, ensuring that data is persisted instantly. Others adopt an eventual consistency model, where the transaction is processed asynchronously, leading to a slight delay before the data is fully committed.

What is a microservices architecture?

A microservices architecture is a software design approach where an application is structured as a collection of small, independent services. Each service focuses on a specific business capability and communicates with other services through APIs (application programming interfaces). Think of it like building with LEGO bricks: Each brick is independent and serves a specific purpose, but combined, they create a larger, more complex structure. Examples might include separate services for a product catalog (manages product information), a shopping cart (handles adding and removing items), or order processing (processes orders and payments).

The challenge of maintaining data consistency

The asynchronous nature of microservice architectures introduces complexity because data consistency across different services isn't immediately guaranteed. Detecting data inconsistencies in decoupled systems is crucial for reliable operations. In distributed microservices environments, transactional calls can sometimes time out due to network congestion, infrastructure limitations, or varying service latencies. Such timeouts can lead to partial transaction completion, where one service commits a change while another fails to do so, creating a state mismatch between the interacting services. The challenge is further exacerbated when multiple transaction services interact asynchronously over a network. If not managed properly, these inconsistencies can lead to operational inefficiencies and incorrect business decisions.

Detecting and mitigating these inconsistencies becomes more complex when dealing with large volumes of data and when the interacting services are not within the same network cluster. The situation becomes even more challenging when one of the interacting services is an external third-party system, such as a partner or vendor platform that enforces its own rate limits, has stricter API quotas, or responds with higher latency. These factors further contribute to the unpredictability of transaction processing, increasing the likelihood of data mismatches.

Like many organizations, the AppDirect engineering team encountered data inconsistencies between our internal systems and Microsoft's platform. In this blog, we explore an event-driven approach to proactively identify, report, and reconcile transactional state mismatches between internal systems and external services, like Microsoft, ensuring data integrity in microservices architectures. By resolving data inconsistencies in decoupled systems, we can effectively maintain data integrity in the AppDirect B2B commerce platform.

How to identify mismatches—Key steps

In our efforts to identify and mitigate state mismatches between the AppDirect system and external services, the engineering team evaluated multiple solutions, and even implemented several of them, before settling on our current detection strategy. Two primary factors influenced our initial approach:

1. Sheer volume of data being processed: Processing a high volume of transactions between AppDirect and external systems necessitates a mismatch detection solution that can efficiently handle large datasets without causing bottlenecks.

2. Network latency associated with cross-system interactions: Communication delays between AppDirect and external systems are inherent, requiring a detection strategy that accounts for asynchronous operations and potential lags in data synchronization, ensuring accuracy despite latency challenges.

Given these constraints, the team determined they needed a method that was both efficient and scalable while ensuring minimal performance overhead on our systems.

Identifying a single ‘source of truth’ for data comparison

Instead of performing a full-scale comparison of all data records between our system and the external service, which would have been computationally expensive and time-consuming, we opted for an incremental approach. This involved dynamically generating a view of misaligned data as changes occurred. Specifically, whenever the state of a domain entity changed within the AppDirect system, the team compared the updated state with the corresponding record in the external system, which was referred to as the "source of truth."
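The incremental check described above can be sketched roughly as follows. This is a minimal illustration and not AppDirect's implementation: `SubscriptionState`, `check_on_change`, the mismatch type names, and the dictionary standing in for the external system are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class SubscriptionState:
    """Hypothetical shape of a domain entity's state."""
    subscription_id: str
    status: str
    quantity: int


def check_on_change(
    updated: SubscriptionState,
    fetch_external: Callable[[str], Optional[SubscriptionState]],
) -> Optional[dict]:
    """Compare one freshly updated internal record with the source of truth.

    Returns a mismatch description, or None when both sides agree.
    """
    external = fetch_external(updated.subscription_id)
    if external is None:
        return {"id": updated.subscription_id, "type": "MISSING_IN_EXTERNAL"}
    # Collect only the fields whose values differ: (internal, external).
    diffs = {
        field: (getattr(updated, field), getattr(external, field))
        for field in ("status", "quantity")
        if getattr(updated, field) != getattr(external, field)
    }
    if diffs:
        return {"id": updated.subscription_id, "type": "FIELD_MISMATCH", "fields": diffs}
    return None


# Stand-in for the external system; a real lookup would be a network call.
external_store = {"sub-1": SubscriptionState("sub-1", "ACTIVE", 5)}

mismatch = check_on_change(
    SubscriptionState("sub-1", "SUSPENDED", 5), external_store.get
)
print(mismatch)
```

Because only the record that just changed is compared, the cost per check stays small, which is the property that made this approach attractive at high transaction volumes.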

This incremental validation technique offered two benefits. First, it helped the team quickly identify and isolate inconsistencies, making it significantly easier to trace them back to the faulty code responsible for generating the state mismatches. Second, it provided faster insights into misalignments, enabling us to address discrepancies in near real time.

Drawbacks to incremental validation

However, while this incremental approach proved efficient in many cases and helped us rectify specific inconsistencies, it had its limitations.

Lacking a holistic system view: Incremental validation often focuses on individual transactions or recent changes. This narrow approach misses inconsistencies that arise from the interaction of multiple operations or those rooted in older data, leading to a fragmented understanding of data integrity.

Historical, accumulated discrepancies over time: Small, undetected discrepancies can snowball into significant issues over time, especially in systems with continuous data updates. Without a mechanism to identify and reconcile these accumulated mismatches, business processes relying on accurate data can be impacted.

Adopting full-scale scanning to identify data inconsistencies

To address this shortcoming, we decided to move beyond incremental detection and implement a full-scale scanning and comparison mechanism. This approach involved performing a complete reconciliation of domain states between the two systems, ensuring that every entity's state was verified comprehensively. Given the scale and complexity of data, executing such an operation efficiently required a well-architected solution that could handle large volumes of data while minimizing the impact on system performance.
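As a rough sketch of what a complete reconciliation involves, the snippet below compares every record on both sides, keyed by ID, and treats the external data as the source of truth. The function name, the discrepancy type labels, and the flat ID-to-status dictionaries are assumptions made for illustration only.

```python
from typing import Dict, List


def reconcile(internal: Dict[str, str], external: Dict[str, str]) -> List[dict]:
    """Compare every record on both sides, treating `external` as the source of truth.

    Both arguments map a subscription ID to its status.
    """
    discrepancies = []
    # The union of keys ensures records missing on either side are also caught.
    for sub_id in sorted(internal.keys() | external.keys()):
        ours, theirs = internal.get(sub_id), external.get(sub_id)
        if ours is None:
            discrepancies.append({"id": sub_id, "type": "MISSING_INTERNALLY", "expected": theirs})
        elif theirs is None:
            discrepancies.append({"id": sub_id, "type": "MISSING_EXTERNALLY", "actual": ours})
        elif ours != theirs:
            discrepancies.append(
                {"id": sub_id, "type": "STATUS_MISMATCH", "expected": theirs, "actual": ours}
            )
    return discrepancies


internal_records = {"a": "ACTIVE", "b": "SUSPENDED", "c": "ACTIVE"}
external_records = {"a": "ACTIVE", "b": "ACTIVE", "d": "ACTIVE"}

found = reconcile(internal_records, external_records)
for item in found:
    print(item)
```

Unlike the incremental check, this scan also surfaces old records that have not changed recently, at the cost of touching every entity on each run.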

To achieve this, the AppDirect team implemented an independent process powered by a Kafka-based event pipeline. This pipeline was designed to efficiently handle asynchronous data processing and reconciliation at scale. The entire process was orchestrated using Kubernetes cron jobs, which allowed us to schedule periodic scans and comparisons in an automated fashion. By leveraging Kafka's event-driven architecture, the team ensured that state verification and reconciliation were performed reliably and in a distributed manner, reducing the likelihood of bottlenecks. For background on how AppDirect replaced its RabbitMQ layer with Kafka, see our blog post 7 Steps to Replacing a Message Broker in a Distributed System.

Breaking down the architecture pipeline

Our pipeline consists of multiple independent steps, each designed to perform a specific function within the overall architecture. Given that the AppDirect platform follows a microservices-based approach, we have dedicated services responsible for handling distinct domain functions.

This architectural choice means that various services within our internal cluster interact with one another, so different components of the system can operate independently while still contributing to the larger workflow. For a more detailed breakdown of each participating module and service, please refer to the architecture section below, which includes an overview of the system’s design and integration points.

Pipeline overview: Key processing stages

The pipeline runs as a series of well-defined steps that systematically retrieve data, compare it, and process any discrepancies. The primary stages of this workflow include:

- Active Tenant Retrieval:
  - The pipeline begins by iterating over all active tenants in our platform.
  - For each tenant, we load the access tokens required for making authenticated network calls to external systems.

- Customer Data Fetching:
  - Once the access tokens are obtained, we fetch customer data from the external system (Microsoft, in this case).
  - This step queries the external system to retrieve all active customers associated with a given tenant.

- Subscription Data Collection:
  - After obtaining the list of active customers for a tenant, we fetch all associated subscription records from Microsoft.
  - These subscriptions represent the various products and services that customers have subscribed to through our platform.

- System Comparison:
  - In this step, we retrieve the corresponding subscription data stored in our own system.
  - A series of mismatch detection algorithms is then applied to compare the data retrieved from Microsoft against our internally stored records.
  - This ensures that any discrepancies (whether in subscription status, entitlements, or metadata) are identified accurately.

- Discrepancy Identification:
  - If any mismatches or inconsistencies are detected, a discrepancy event is generated.
  - Each event contains detailed information about the mismatched records, including the type of discrepancy (for example, missing records, incorrect subscription status, or data corruption).

- Downstream Processing & Reporting:
  - The generated discrepancy events are then published to a Kafka topic, ensuring that they are processed in a scalable and asynchronous manner.
  - A Kafka consumer service listens for these events and processes them accordingly.
  - Once processed, the discrepancy data is stored in a dedicated data warehouse schema, allowing for efficient querying and reporting.
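The stages above can be sketched end to end as a small Python program. Everything here is an assumption made for illustration: `FakeExternalClient` serves canned data in place of the real external API, `DiscrepancyEvent` is a hypothetical event shape, and a plain list stands in for the Kafka topic.

```python
from dataclasses import asdict, dataclass
from typing import Dict, Iterable, Iterator, List, Tuple


@dataclass(frozen=True)
class DiscrepancyEvent:
    tenant_id: str
    customer_id: str
    subscription_id: str
    kind: str


class FakeExternalClient:
    """Hypothetical stand-in for the external API; serves canned data."""

    def __init__(self, data: Dict[str, Dict[str, Dict[str, str]]]) -> None:
        self._data = data  # tenant -> customer -> subscription -> status

    def get_token(self, tenant_id: str) -> str:
        return f"token-for-{tenant_id}"

    def list_customers(self, tenant_id: str, token: str) -> List[str]:
        return list(self._data.get(tenant_id, {}))

    def list_subscriptions(self, tenant_id: str, customer_id: str, token: str) -> Dict[str, str]:
        return self._data[tenant_id][customer_id]


def scan(
    tenants: Iterable[str],
    client: FakeExternalClient,
    internal: Dict[Tuple[str, str], Dict[str, str]],
) -> Iterator[DiscrepancyEvent]:
    for tenant_id in tenants:                                        # active tenants
        token = client.get_token(tenant_id)                          # access token
        for customer_id in client.list_customers(tenant_id, token):  # customers
            theirs = client.list_subscriptions(tenant_id, customer_id, token)
            ours = internal.get((tenant_id, customer_id), {})        # internal records
            for sub_id in sorted(theirs.keys() | ours.keys()):       # comparison
                if sub_id not in ours:
                    kind = "MISSING_INTERNALLY"
                elif sub_id not in theirs:
                    kind = "MISSING_EXTERNALLY"
                elif ours[sub_id] != theirs[sub_id]:
                    kind = "STATUS_MISMATCH"
                else:
                    continue  # records agree, nothing to report
                yield DiscrepancyEvent(tenant_id, customer_id, sub_id, kind)


def publish(events: Iterable[DiscrepancyEvent], topic: List[dict]) -> None:
    """Append each event to `topic`, a list standing in for a Kafka topic."""
    for event in events:
        topic.append(asdict(event))


client = FakeExternalClient({"t1": {"c1": {"s1": "ACTIVE", "s2": "ACTIVE"}}})
internal_db = {("t1", "c1"): {"s1": "SUSPENDED", "s3": "ACTIVE"}}
discrepancy_topic: List[dict] = []
publish(scan(["t1"], client, internal_db), discrepancy_topic)
print(discrepancy_topic)
```

Writing `scan` as a generator keeps memory use flat: events are produced and published one at a time rather than collected for all tenants first.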

Our data warehouse pipeline is deliberately generic: other services can use the same infrastructure to publish and report on their own data discrepancies.

A scalable and flexible architecture

The architecture has been designed with scalability, reusability, and flexibility as its core principles. This ensures that the solution not only meets the immediate requirements of state mismatch detection but also seamlessly integrates with other systems and services that may require similar validation mechanisms. By leveraging a Kafka-based event-driven pipeline, the system is inherently capable of handling large-scale data processing while maintaining high availability and fault tolerance.

What is a Kafka-based pipeline?

A Kafka-based event-driven pipeline is a distributed messaging system where services communicate asynchronously through events streamed via Apache Kafka. When a service performs an action, it publishes an "event" to Kafka. Other interested services subscribed to these events can then react accordingly, creating a loosely coupled and scalable architecture.
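To make the publish and subscribe model concrete, here is a toy in-memory log. It is not the Kafka API and has no partitions, persistence, or networking; `InMemoryTopic` and the event shapes are invented for this sketch. It only illustrates the idea that producers append events to a log while each consumer group reads independently from its own position.

```python
from collections import defaultdict
from typing import Any, Dict, List


class InMemoryTopic:
    """Toy append-only log in which each consumer group tracks its own offset."""

    def __init__(self) -> None:
        self._log: List[Any] = []
        self._offsets: Dict[str, int] = defaultdict(int)

    def publish(self, event: Any) -> None:
        # Producers only append; they know nothing about who will read.
        self._log.append(event)

    def poll(self, group: str) -> List[Any]:
        # Return everything this group has not seen yet, then advance its offset.
        start = self._offsets[group]
        batch = self._log[start:]
        self._offsets[group] = len(self._log)
        return batch


topic = InMemoryTopic()
topic.publish({"type": "SUBSCRIPTION_UPDATED", "id": "sub-1"})
topic.publish({"type": "SUBSCRIPTION_CANCELLED", "id": "sub-2"})

reporting_batch = topic.poll("reporting")  # both events
billing_batch = topic.poll("billing")      # the same two events, read independently
reporting_again = topic.poll("reporting")  # empty: this group is already caught up
```

The producer never references a consumer, which is what keeps the services loosely coupled: adding a new subscriber requires no change on the publishing side.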

Key advantages

One key advantage of this architecture is its loosely coupled design, which allows different components to interact asynchronously without creating tight dependencies between services. This decoupling ensures that each service operates independently, enabling greater flexibility and easier maintenance. When a state mismatch is detected, the system ensures that records are eventually processed and reconciled without causing unnecessary performance bottlenecks or system failures.

The event-driven nature of the Kafka pipeline plays a crucial role in guaranteeing eventual consistency across distributed systems. Instead of relying on synchronous processing, where all operations must be completed in real time, the system asynchronously processes state mismatches. This design choice significantly improves system resilience, allowing it to handle network latencies, temporary service unavailability, and fluctuating workloads without disrupting overall operations.
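One way to picture this resilience is a consumer loop that re-queues an event when a downstream dependency is temporarily unavailable instead of failing the whole run. The sketch below is an assumption-laden illustration: `drain`, the attempt limit, and the `flaky_handler` are hypothetical, and a real Kafka consumer would typically rely on offset management and a dead-letter topic rather than an in-process queue.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Tuple


def drain(
    events: List[str],
    handle: Callable[[str], None],
    max_attempts: int = 3,
) -> Tuple[List[str], List[str]]:
    """Process events, re-queueing transient failures up to max_attempts each.

    Returns (processed, dead_lettered).
    """
    pending: Deque[Tuple[str, int]] = deque((event, 1) for event in events)
    done: List[str] = []
    dead: List[str] = []
    while pending:
        event, attempt = pending.popleft()
        try:
            handle(event)
            done.append(event)
        except ConnectionError:
            if attempt < max_attempts:
                # Put the event at the back so other events are not blocked.
                pending.append((event, attempt + 1))
            else:
                dead.append(event)
    return done, dead


calls: Dict[str, int] = {}


def flaky_handler(event: str) -> None:
    """Hypothetical handler: 'e2' fails once, 'e3' always fails."""
    calls[event] = calls.get(event, 0) + 1
    if event == "e2" and calls[event] < 2:
        raise ConnectionError("downstream temporarily unavailable")
    if event == "e3":
        raise ConnectionError("downstream unavailable")


done, dead = drain(["e1", "e2", "e3"], flaky_handler)
print(done, dead)
```

The event that fails once is eventually processed, and the one that keeps failing is set aside after three attempts, so a single bad record does not stall the rest of the queue.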

Smooth business process & strong customer relationships

Given the inherently transactional nature of decoupled systems, data mismatches are unavoidable. Because different services operate independently, each with its own transaction management technique, discrepancies in data states between interconnected systems are bound to occur over time. While we have already taken significant steps to rectify faulty code in the initial stages of development, we recognize that addressing individual issues is only part of the solution.

At present, the AppDirect team is leveraging this proactive approach to detect isolated inconsistencies and gain a comprehensive and holistic view of overall state discrepancies between our system and external services. This approach has several advantages:

This broader perspective enables us to go beyond reactive fixes and develop long-term strategies to mitigate potential mismatches before they impact critical business operations.

By continuously monitoring and analyzing these discrepancies, we can identify patterns and root causes to refine our system architecture and optimize data synchronization mechanisms.

Looking ahead, we plan to use the insights derived from this state mismatch detection process to build a more resilient and reliable system. By proactively identifying and addressing inconsistencies, we can develop smarter validation mechanisms, automate corrective actions, and refine data synchronization techniques. This will not only streamline day-to-day operations but also prepare our infrastructure for evolving challenges. Ultimately, our goal is to ensure smoother business processes, enhance system stability, and build stronger, long-lasting customer relationships.

Next steps

Check out our Developer Center to explore robust APIs and powerful toolkits to help you customize your subscription marketplace. Or launch your marketplace with the AppDirect platform today!
